Object Detection is the ability to detect multiple classes of objects and identify them within the best possible concise bounding boxes in an image, video, or live camera feed. Various algorithms to achieve object detection like R-CNN, Fast R-CNN, Faster R-CNN, SSD and YOLO.

Classification and Localization

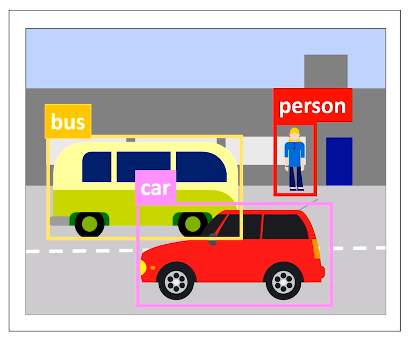

For example, let's classify vehicles and people in this image. Generally, the classification and location of multiple objects are known as object detection.

If this article could be summed up in an expression:

(Image Classification + Image Localization) = Object Detection

Fundamentally, the solution is to generate outputs that indicate the probabilities of the detected object to be a particular class and the location of the bounding box containing that object.

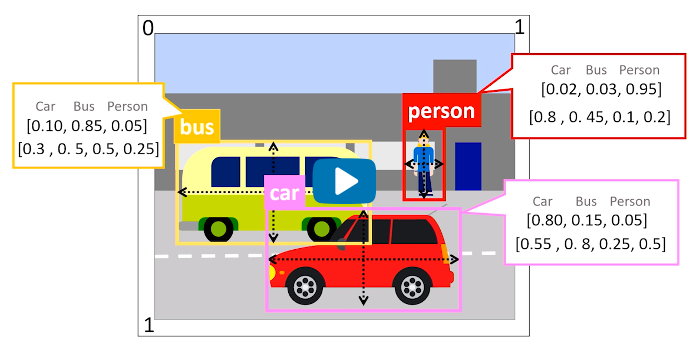

Here, we use a convention to denote the target locations of a detected object based on the presumed top-left coordinate (0,0) in the image and locations as X and Y coordinates for the centre of the bounding box.

Inspection of the Coordinates

Let's look at an image example of object detection.

To clarify, in the image:

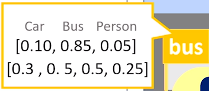

The first array of values [0.10, 0.85, 0.05] represents the generated probabilities of the detected object classes in a range (0-1) totalling 1. So the detected object was classified as a Car by 10%, Bus by 85% and Person by 5%. Hence the maximum probability of 85% being Bus was selected.

Similarly, the second array of values [0.3, 0.5, 0.5, 0.25] represents the width and height of the bounding box relative to the size of the overall image. So the centre of the bus is around 0.3 from the left of the image, 0.5 from the top, 0.5 as wide as the image and 0.25 as tall as the image.

Understanding the Process

To understand the object detection process, we need to know how to locate and classify multiple objects in an image. Out of various approaches, we take a look at some of the standard approaches.

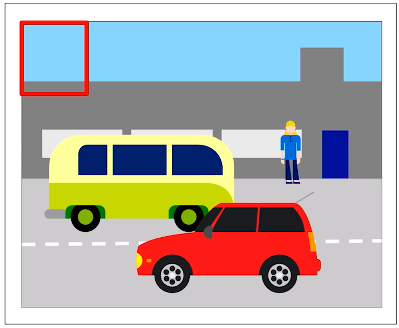

First, we apply a classification model to multiple regions of the same image. This can be achieved by using the Sliding Windows approach.

In this method, we usually create a square-shaped window and place it in a specific image region, mostly starting at the top left part like in the figure below.

Then, we feed this into a convolutional neural network to classify this patch of the image. We slide the window along to the neighbouring regions and continue the process to classify all of the window size regions in the image.

We can repeat the same process with different-sized windows or on the rescaled versions of the image size so that we can detect objects of varying sizes.

The only problem with this is that it is computationally impractical for a convolutional neural network to apply classifiers for every window position.

Thank you for reading. Please leave comments if you have any. Good day!