Introduction:

When generative AI tools built on Large Language Models (LLMs), such as ChatGPT and Copy.ai, first emerged, I was sceptical of their usefulness and apprehensive about their potential impact on my profession as a content writer and editor. Over time, however, I came to embrace these tools for refining and editing my work. Extensive experimentation led me to a valuable realization: the quality of AI-generated content hinges on the quality of the instructions given to these models.

This article explores the basics of prompt engineering for generative AI tools, its applications in content generation, the prompt framework, and various prompt engineering techniques you can use to generate and refine content.

What is a Large Language Model (LLM)?

Large language models are advanced AI systems trained on massive datasets to process and generate human language. These models, such as OpenAI's GPT-3, are characterized by their immense size: they contain billions or even hundreds of billions of parameters, which enables them to perform a wide array of natural language processing tasks with remarkable fluency and coherence. They can work with text across many domains, from answering questions and summarizing content to translating languages and producing creative writing. For example, a large language model can draft news articles, generate creative pieces of literature, assist customer support by answering queries, and power conversational AI applications. This versatility shows its potential to enhance human-machine interaction and content generation.

What is Prompt Engineering?

Prompt engineering is the practice of formulating and refining the prompts, or instructions, given to AI language models to generate text or responses. Its primary goal is to obtain more accurate and more useful outputs from the model by giving it contextual, accurate, and concise input. It has emerged as a transformative technique for interacting more effectively with tools built on Large Language Models (LLMs), such as ChatGPT, Copy.ai, or Bard.

Prompt Engineering Techniques with Examples:

AI prompt engineering enables users to harness the power of generative models to create high-quality, engaging content more efficiently. By providing specific instructions to the AI model through prompts, users can shape the output to align with their requirements, saving time and effort while maintaining creativity. Here are a few basic techniques to remember before giving instructions to AI models like ChatGPT:

Prompt Priming Technique:

Prompt Priming refers to providing an initial context or seed phrase to guide the AI model's subsequent responses. Let's try it out in an example prompt for ChatGPT:

As you can see, the prompt "Write a haiku" generated a very generic response. Now let's try again by giving context about the kind of haiku we want it to generate.

Evidently, the primed prompt "Write a haiku about alienation in a city" generated much more focused and relevant content.
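The difference between the two prompts can be sketched in a few lines of Python. This is only an illustration: `prime_prompt` is a hypothetical helper, not part of ChatGPT or any library, and it simply builds the text you would paste into the chat window.

```python
def prime_prompt(task, context=""):
    """Return the bare task, or the task followed by priming context."""
    task = task.strip()
    context = context.strip()
    if not context:
        return task
    return f"{task} {context}"


# The generic prompt and the primed prompt from the example above.
generic = prime_prompt("Write a haiku")
primed = prime_prompt("Write a haiku", "about alienation in a city")

print(generic)
print(primed)
```

Keeping the task and the context separate makes it easy to reuse the same task with different priming phrases and compare the results.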

Iteration Technique:

Iteration refers to making small, incremental changes to a prompt and generating responses until you achieve the desired outcome. With each interaction, users can build on the AI-generated response by rephrasing the prompt and giving more context. For instance, suppose a tourist visiting Kathmandu wants to know the best places to visit in the valley. So he asks the AI to "list the 10 best places to visit in Kathmandu":

After seeing the response, he realizes he only wants to visit places with natural scenic beauty instead of historical places. So, he needs to prompt a second iteration asking it to "list the 10 best places to visit in Kathmandu with natural scenic beauty".

He also wants to ensure that the places he visits have proper accommodation facilities. So, he needs to include that in the third iteration of the prompt.

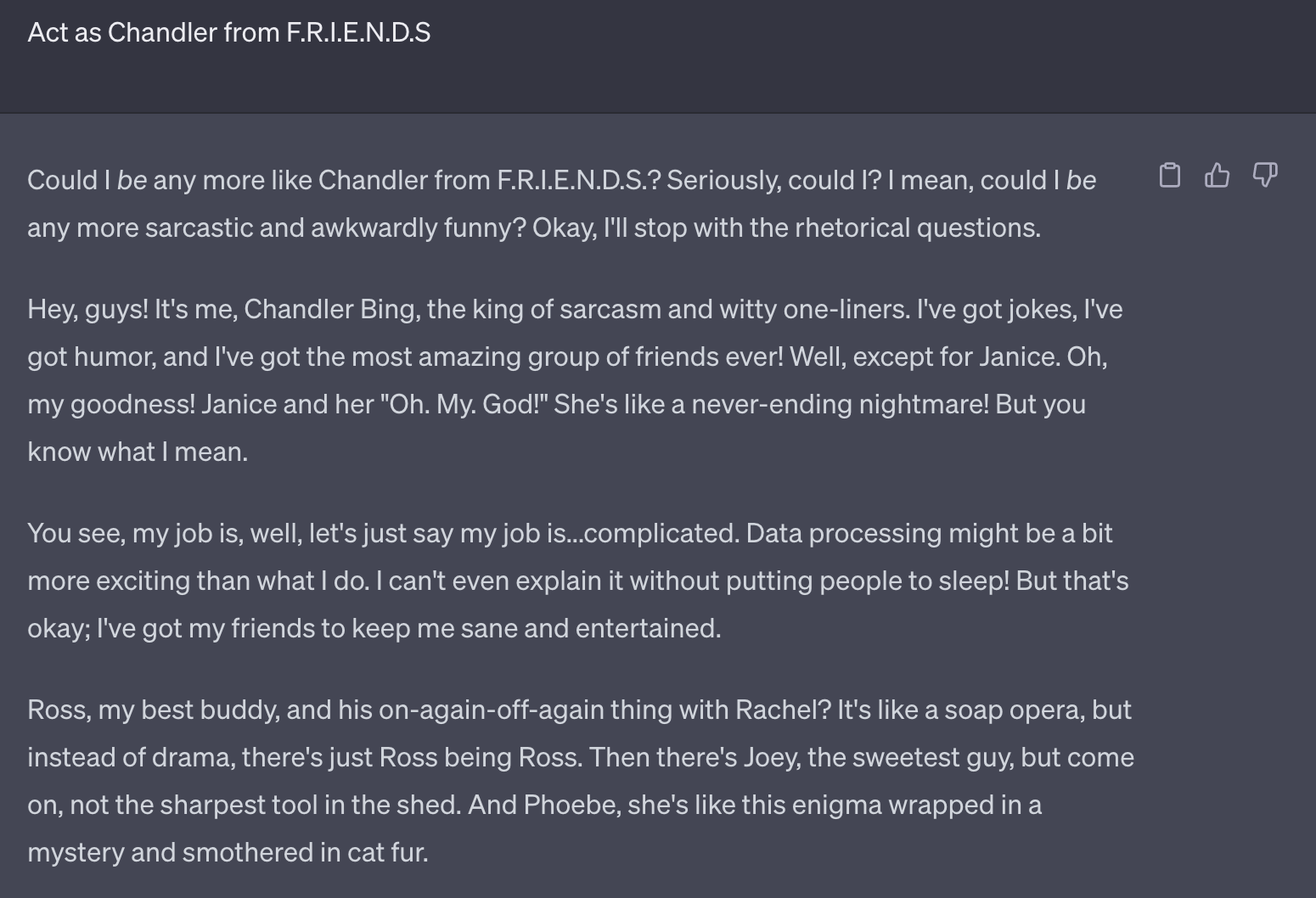
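The three iterations of the Kathmandu prompt can be written down as a small Python sketch. The `refine` helper is hypothetical and only assembles prompt text; the exact wording of the third iteration is an assumption based on the description above.

```python
def refine(prompt, *constraints):
    """Append each refinement to the base prompt, in the order given."""
    parts = [prompt.strip()] + [c.strip() for c in constraints]
    return " ".join(parts)


base = "List the 10 best places to visit in Kathmandu"

# Each iteration keeps the earlier wording and adds one new requirement.
iterations = [
    base,
    refine(base, "with natural scenic beauty"),
    refine(base, "with natural scenic beauty", "and proper accommodation facilities"),
]

for number, prompt in enumerate(iterations, start=1):
    print(f"Iteration {number}: {prompt}")
```

Writing the iterations out like this also gives you a record of which change produced which improvement.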

Act-As-A Technique:

The act-as-a technique allows users to instruct the AI to respond as if it were a specific character or an expert from a certain field. This technique is valuable for creative storytelling, hypothetical scenarios, and getting specialized, targeted answers from a simulated expert. In the example below, I prompted the AI to act as Chandler Bing from the TV series Friends:

I don't think Monica would like this response much but it's a pretty decent emulation of Chandler-speak, don't you agree?

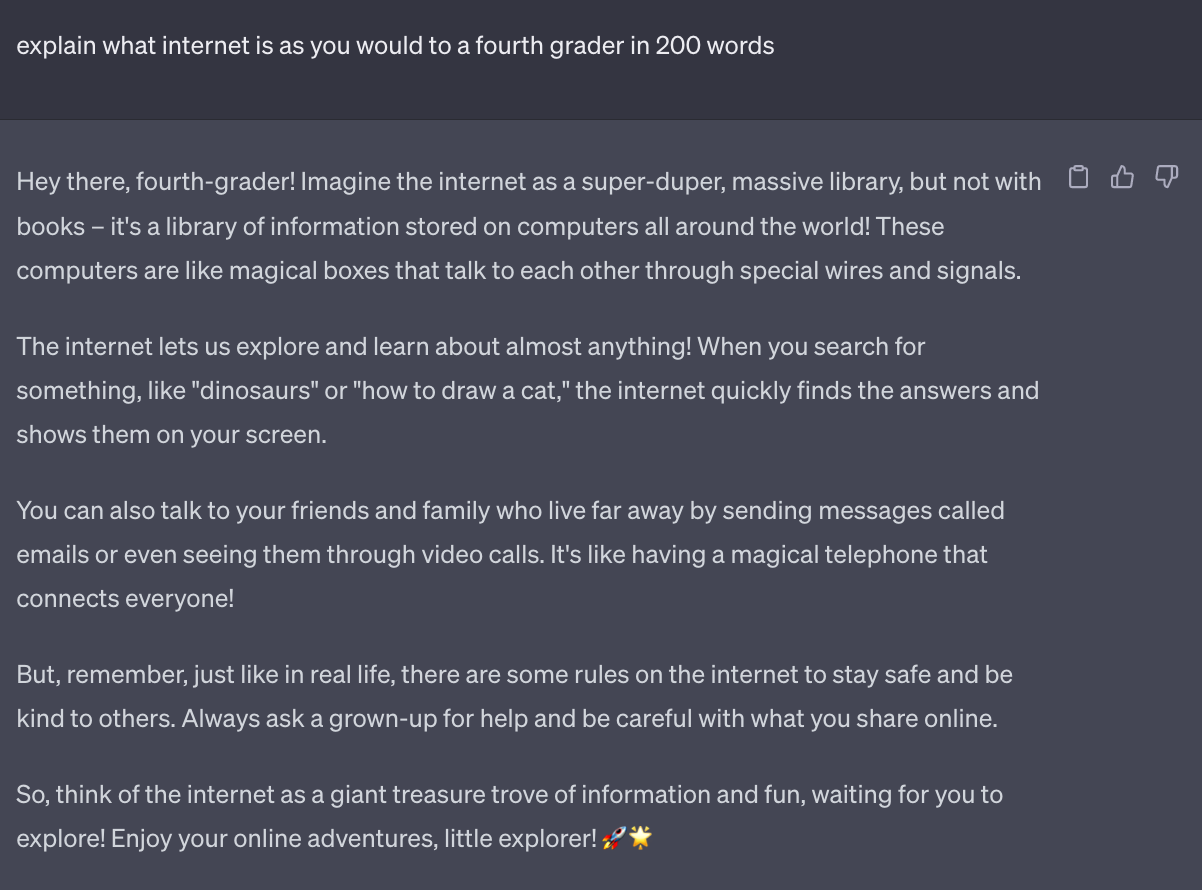
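If you work with a model through code rather than a chat window, the persona is often placed in a separate instruction message. The sketch below assumes the role/content message shape that many chat-style APIs accept; `act_as` is a hypothetical helper, and the user request is an invented example, not the prompt used in the screenshot.

```python
def act_as(persona, user_prompt):
    """Build a two-message conversation: persona instruction, then the request."""
    return [
        {"role": "system", "content": f"Act as {persona}. Stay in character in every reply."},
        {"role": "user", "content": user_prompt},
    ]


messages = act_as(
    "Chandler Bing from the TV series Friends",
    "Tell Monica you forgot to buy the groceries.",  # hypothetical request
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

In a plain chat window, the same effect comes from typing the persona instruction first and the request second.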

4th Grader Technique:

The 4th grader technique simplifies complex topics by requesting explanations in simple language, making it ideal for educational content. You can also adapt it by asking the AI to generate content for a specific reading grade.

For instance, I asked ChatGPT to explain the internet to a fourth grader:
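As a rough sketch, the reading grade can be treated as a parameter of the prompt. The `explain_for_grade` function and its wording are assumptions made for illustration; any phrasing that names the topic and the target grade should work similarly.

```python
def explain_for_grade(topic, grade):
    """Build a prompt asking for an explanation at a given school grade level."""
    if not 1 <= grade <= 12:
        raise ValueError("grade must be between 1 and 12")
    return f"Explain {topic} in simple language that a grade {grade} student can understand."


prompt = explain_for_grade("the internet", 4)
print(prompt)
```

Changing the grade number is a quick way to get versions of the same explanation for different audiences.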

Follow-up Questions Technique:

Users can start with a broad question and then ask follow-up questions to gain deeper insights or clarifications. For example, let's ask the AI who Doctor Who is:

The response is pretty impressive, but it doesn't list what his superpowers are. So we ask about them in a follow-up question.
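A follow-up question only makes sense if the model can see the earlier exchange. The sketch below shows one way to keep that history, assuming the role/content message shape used by many chat-style APIs. The helper names are hypothetical, and the assistant reply is a placeholder rather than real model output.

```python
def ask(history, question):
    """Return a new history with the user's question appended."""
    return history + [{"role": "user", "content": question}]


def record_reply(history, reply):
    """Return a new history with the model's reply appended."""
    return history + [{"role": "assistant", "content": reply}]


history = ask([], "Who is Doctor Who?")
history = record_reply(history, "<model reply describing the character>")  # placeholder
history = ask(history, "What are his superpowers?")

for message in history:
    print(f"{message['role']}: {message['content']}")
```

Because the first question and its answer stay in the history, the word "his" in the follow-up has something to refer to.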

Teach Me Technique:

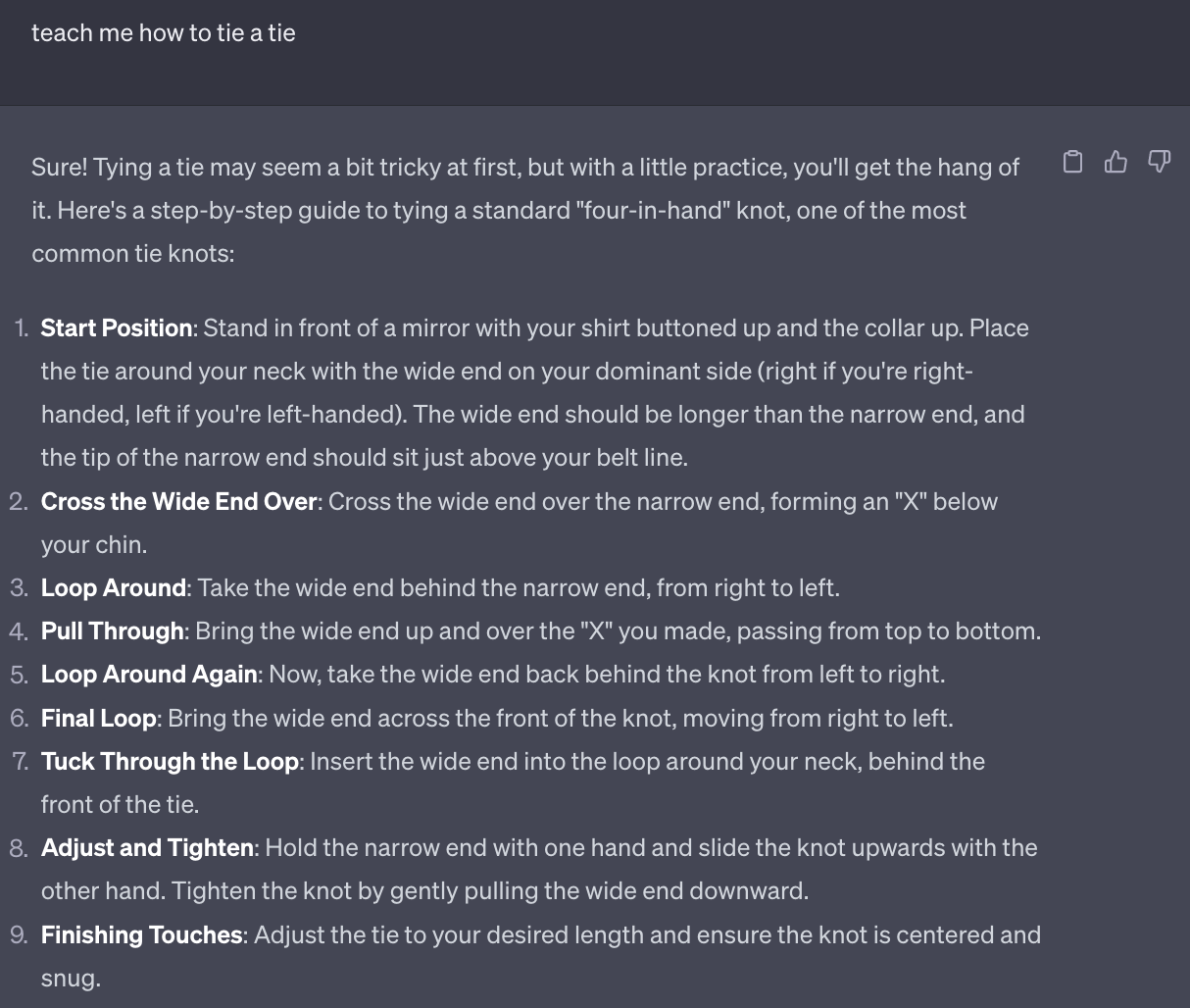

The teach-me technique enables users to request explanations of specific topics or concepts. This approach fosters interactive learning with the AI model. For instance, I asked ChatGPT to "Teach me how to tie a tie", and it generated a very comprehensive tutorial.

Write Like Technique:

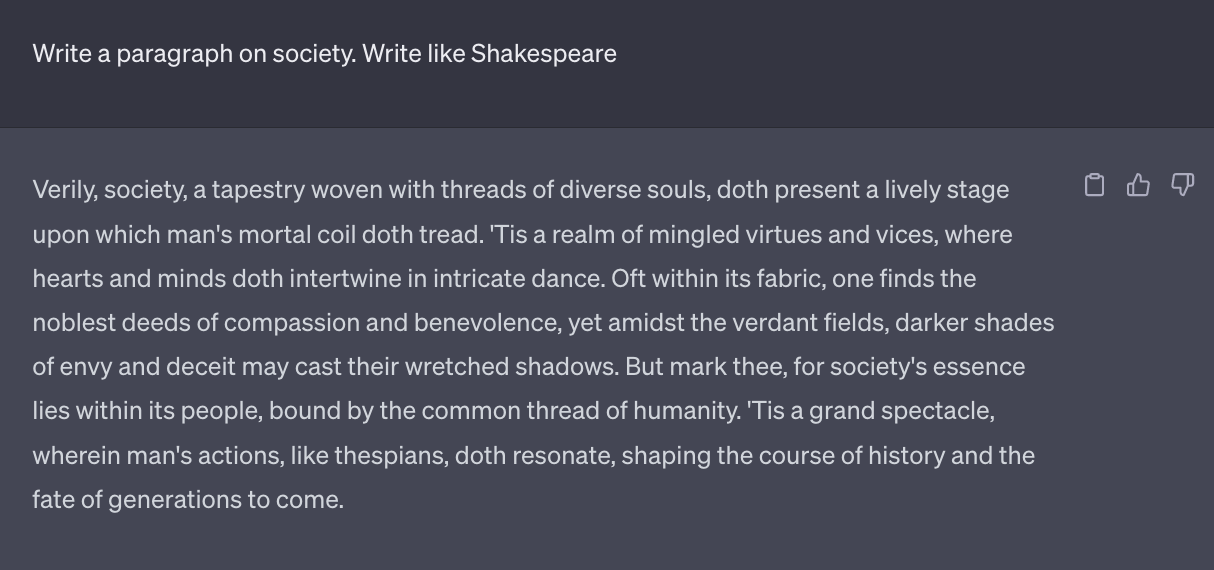

The write-like technique instructs the AI model to imitate a particular writing style. You can also tweak it to prompt "write like me" after providing it with samples of your writing. This helps keep your content consistent in tone and style.

For example, I asked the AI to write a paragraph on society the way Shakespeare would:
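Both variants, naming a style and supplying your own samples, can be sketched as one hypothetical helper. The function name, the prompt wording, and the sample texts are assumptions for illustration only.

```python
def write_like(task, style="", samples=()):
    """Build a style-imitation prompt from a named style or from writing samples."""
    if samples:
        numbered = "\n\n".join(
            f"Sample {i}:\n{text.strip()}" for i, text in enumerate(samples, start=1)
        )
        return (
            "Here are samples of my writing:\n\n"
            f"{numbered}\n\n"
            f"Write like me: {task}"
        )
    if style:
        return f"{task}, written the way {style} would write it."
    raise ValueError("provide either a style or at least one sample")


shakespeare = write_like("Write a paragraph on society", style="Shakespeare")
mine = write_like(
    "Write a paragraph on society",
    samples=["First sample text.", "Second sample text."],  # placeholder samples
)

print(shakespeare)
print(mine)
```

Placing the samples before the request gives the model the style reference first, so the final instruction can stay short.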

Prompt for Advice Technique:

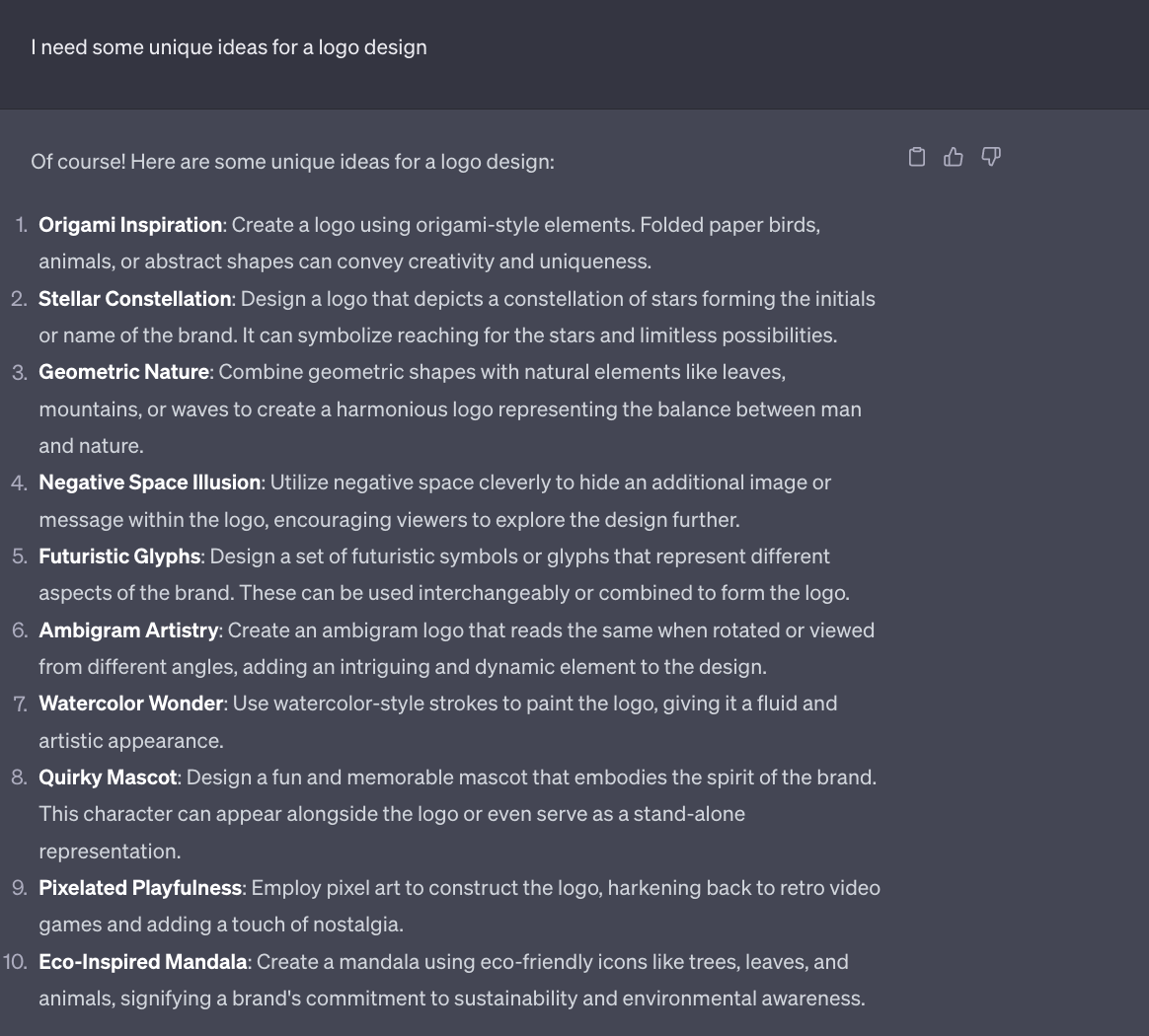

Requesting advice from the AI model allows users to receive creative suggestions or ideas, which is especially valuable in brainstorming sessions. For instance, I asked ChatGPT to give me some unique ideas for a logo design.

The Prompt Framework:

A prompt framework is a structured approach, or set of guidelines, for communicating effectively with an AI language model and obtaining the desired outputs. It covers how prompts are constructed, formatted, and presented to the model to elicit specific responses that align with the user's requirements.

The prompt framework is crucial in maximizing the utility and accuracy of AI-generated content. It helps us better control the model's responses, ensuring that the generated output is contextually relevant, coherent, and aligned with the intended use case.

Elements of a Prompt Framework in Generative AI:

Clear Instructions:

Precise and unambiguous instructions are fundamental to the prompt framework. Users should give the AI model specific guidance that spells out the desired context, tone, or style of the generated content. Clear instructions help prevent misunderstandings and lead to more accurate responses.

Context Establishment:

A good prompt framework ensures initial prompts set the necessary context for the AI model. By providing relevant background information or context, users help the model better understand the task or query, resulting in more relevant and coherent responses.

Length and Format:

The length and format of prompts are essential aspects of the prompt framework. While concise prompts are often preferred to focus the model on the main task, some tasks require more detailed instructions. Additionally, certain prompt formats, such as "fill in the blank" or "describe as," can guide the model in generating specific types of content.

Experimentation and Iteration:

A robust prompt framework encourages experimentation and iteration. Users can fine-tune their prompts by testing different approaches and observing how the AI model responds. Iteratively refining prompts can lead to more accurate and satisfactory outputs.

Bias Mitigation:

Within the prompt framework, users should be aware of potential biases in AI-generated content. Explicitly instructing the model to avoid biased or harmful content can help reduce this issue.

Language and Vocabulary Constraints:

For certain applications, imposing language or vocabulary constraints on the AI model might be necessary. It can help ensure the generated content adheres to specific guidelines or remains within certain stylistic boundaries.

Response Length Limitations:

Depending on the use case, users may need to set response length limitations for the AI model. Doing so prevents excessively long or short responses and helps maintain consistency in the generated content.
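The elements above can be combined into a single reusable prompt template. The following is a minimal sketch, not a standard: the `PromptSpec` class, its field names, the output layout, and the example values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical container for the framework elements described above."""
    instruction: str                                  # clear instructions
    context: str = ""                                 # context establishment
    tone: str = ""                                    # language and vocabulary constraints
    avoid: List[str] = field(default_factory=list)    # bias mitigation
    max_words: Optional[int] = None                   # response length limitation

    def render(self):
        """Assemble the non-empty elements into one prompt, one element per line."""
        parts = []
        if self.context:
            parts.append(f"Context: {self.context}")
        parts.append(f"Task: {self.instruction}")
        if self.tone:
            parts.append(f"Tone: {self.tone}")
        if self.avoid:
            parts.append("Avoid: " + "; ".join(self.avoid))
        if self.max_words is not None:
            parts.append(f"Length: at most {self.max_words} words")
        return "\n".join(parts)


spec = PromptSpec(
    instruction="Write a product description for a reusable water bottle",
    context="The audience is hikers",
    tone="friendly",
    avoid=["stereotypes", "jargon"],
    max_words=80,
)
print(spec.render())
```

Experimentation and iteration then come down to changing one field at a time and comparing the responses.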

Conclusion:

Prompt engineering has changed how we interact with AI language models, giving users far more control and creative range in content generation. From shaping narratives to extracting valuable insights, the possibilities are wide. By combining various prompt engineering techniques with a thoughtful prompt framework, users can get much more out of AI language models. So, next time you need to write, brainstorm, or seek inspiration, remember that with prompt engineering, the AI is your ally in achieving your creative goals.

Thank you for reading! If you have any queries or want to share your experiences with AI prompt engineering, please leave a comment below. Happy prompting!