Two distinct approaches to handling test data come into play in software testing: Data-Driven Testing and Manual Data-Input Testing. While both serve the purpose of validating software functionality, they differ significantly in their methodologies and the efficiency they bring to the testing process.

Data-Driven Testing

Data-driven testing is a methodology in which a single test script is designed to execute with multiple input data sets. The focus is on reusability and scalability, as many test scenarios can be executed using a standardized test script.

The test data ranges from valid to invalid inputs and is often stored in external sources such as databases, Excel spreadsheets, or CSV files. Fake data generators such as Faker and Mockaroo can also produce large volumes of test data with very little code. This approach improves test coverage, reduces redundancy, and helps detect errors under diverse conditions.
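The core idea can be sketched in plain Java without any test framework. In the sketch below, the CSV content is inlined as a string so the example stays self-contained, and `isValidEmail`, the column layout, and the sample rows are hypothetical stand-ins for a real system under test and a real external file.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal data-driven sketch: one check routine, many data rows.
// The validator and the sample data are illustrative assumptions.
final class EmailDataDrivenSketch {

    // Hypothetical test data; in practice this would live in an external CSV file.
    static final String CSV =
        "input,expectedValid\n" +
        "user@example.com,true\n" +
        "no-at-sign.example.com,false\n" +
        "@missing-local.com,false\n" +
        "user@domain,false\n";

    // Stand-in for the system under test: a deliberately simple email check.
    static boolean isValidEmail(String s) {
        int at = s.indexOf('@');
        int dot = s.lastIndexOf('.');
        return at > 0 && dot > at + 1 && dot < s.length() - 1;
    }

    // Runs the same check against every data row and returns the failing inputs.
    static List<String> runAll(String csv) {
        List<String> failures = new ArrayList<>();
        String[] lines = csv.split("\n");
        for (int i = 1; i < lines.length; i++) { // start at 1 to skip the header row
            String[] cols = lines[i].split(",");
            String input = cols[0].trim();
            boolean expected = Boolean.parseBoolean(cols[1].trim());
            if (isValidEmail(input) != expected) {
                failures.add(input);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("Failing inputs: " + runAll(CSV));
    }
}
```

Adding a new scenario here means adding one row of data, not writing another test method, which is where the reusability of the approach comes from.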

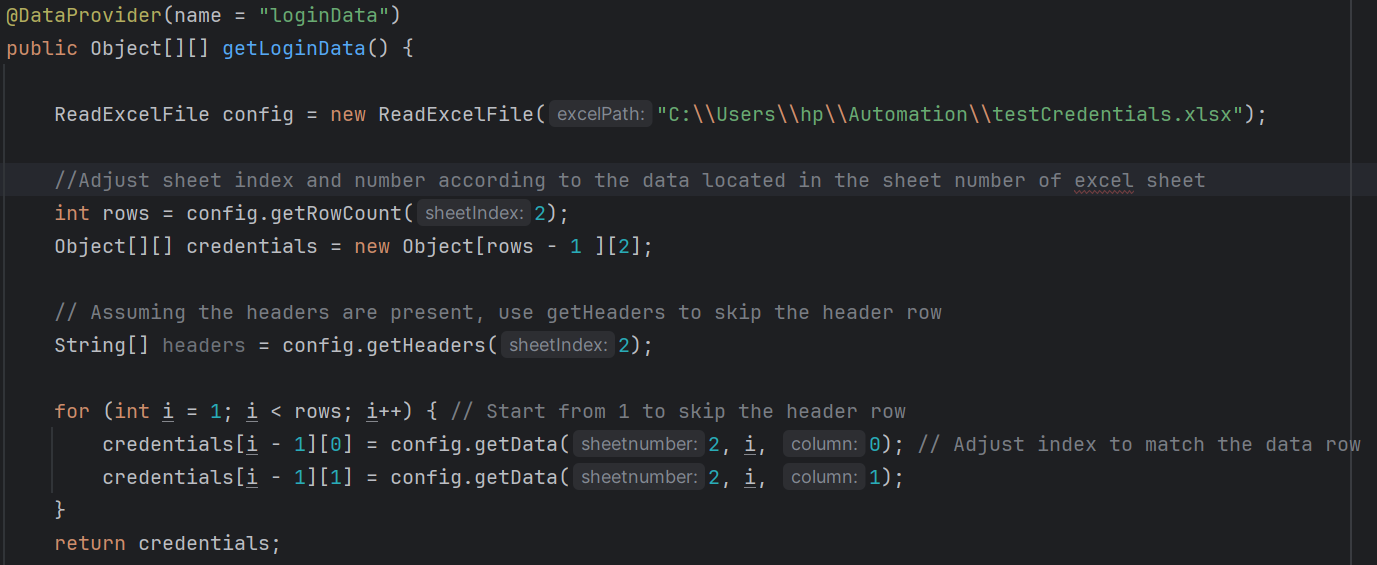

The above Java code defines a TestNG data provider method named getLoginData, which retrieves login credentials from an Excel file for parameterized testing.

The code uses a custom class, ReadExcelFile, to read data from the specified Excel file path. It determines the number of rows in the Excel sheet, initializes a 2D array to store login credentials, and populates the array by iterating through the rows, skipping the header. The method then returns the 2D array, providing varied login data for use in test cases annotated with @Test(dataProvider = "LoginData").
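As a rough illustration of the row-to-array step, the sketch below converts a list of rows into the `Object[][]` shape that a data provider returns, skipping the header. It is only an approximation: the article's `ReadExcelFile` class is not reproduced, so an in-memory list of rows stands in for the Excel sheet, and the class name, method name, and sample credentials are hypothetical. In a TestNG project, a method like this would typically carry the `@DataProvider(name = "LoginData")` annotation.

```java
import java.util.Arrays;
import java.util.List;

// Sketch of the data-provider shape using only the standard library.
// The in-memory rows are an assumption standing in for an Excel sheet.
final class LoginDataProviderSketch {

    // Converts sheet rows (header first) into one {username, password} pair per data row.
    static Object[][] toLoginData(List<String[]> rows) {
        int dataRows = Math.max(rows.size() - 1, 0); // exclude the header row
        Object[][] data = new Object[dataRows][2];
        for (int i = 1; i < rows.size(); i++) {      // start at 1 to skip the header
            String[] row = rows.get(i);
            data[i - 1][0] = row[0];                 // username column
            data[i - 1][1] = row[1];                 // password column
        }
        return data;
    }

    public static void main(String[] args) {
        // Hypothetical sheet contents, including one invalid (empty password) row.
        List<String[]> sheet = Arrays.asList(
            new String[] {"username", "password"},
            new String[] {"alice@example.com", "placeholder-1"},
            new String[] {"bob@example.com", ""}
        );
        for (Object[] credentials : toLoginData(sheet)) {
            System.out.println(Arrays.toString(credentials));
        }
    }
}
```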

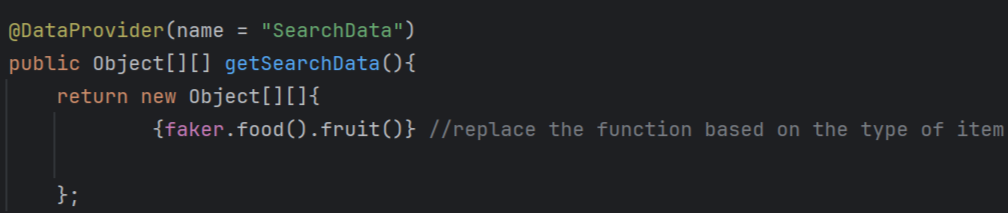

The provided code snippet uses the Faker library to create a TestNG data provider method named getSearchData. It initializes a Faker instance and generates random fruit names using faker.food().fruit() for five sets of search data. The method constructs a 2D array containing these random search terms, allowing diverse and randomized input to be supplied to test cases annotated with @Test(dataProvider = "SearchData").
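The same shape can be approximated with the standard library alone. The sketch below is not the Faker API: it swaps in `java.util.Random` and a small hard-coded fruit list as a stand-in for `faker.food().fruit()`, and the class name, method name, and `seed` parameter are assumptions added for illustration.

```java
import java.util.Arrays;
import java.util.Random;

// Stand-in for a Faker-backed data provider, using java.util.Random instead.
final class SearchDataSketch {

    // Hypothetical pool of search terms; Faker would supply these in the real code.
    static final String[] FRUITS = {"apple", "banana", "cherry", "mango", "papaya", "kiwi"};

    // Builds `count` single-column rows, each holding one randomly chosen search term.
    static Object[][] searchData(int count, long seed) {
        Random random = new Random(seed); // fixed seed makes a failing run reproducible
        Object[][] data = new Object[count][1];
        for (int i = 0; i < count; i++) {
            data[i][0] = FRUITS[random.nextInt(FRUITS.length)];
        }
        return data;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.deepToString(searchData(5, 42L)));
    }
}
```

Seeding the generator is a design choice worth considering with random test data: when a randomly generated input exposes a bug, the same seed reproduces the same inputs on the next run.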

Manual Data-Input Testing

In manual input testing, by contrast, testers execute test cases by entering specific sets of input data into the application. This involves typing fixed values directly into the test steps, for example with Selenium's sendKeys method, to simulate user interactions. Testers observe how the application responds under various scenarios. Although this approach provides a hands-on testing experience, it is more labour-intensive and time-consuming because each unique test data set requires a dedicated test case. Unlike data-driven testing, it does not scale well as the number of data sets grows.

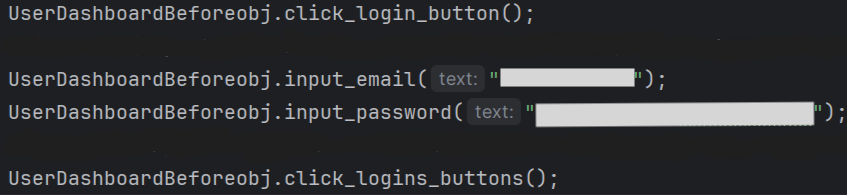

The above code simulates user actions in a test scenario using an object named UserDashboardBeforeobj. First, it clicks a login button using the method click_login_button. Then, it inputs a specific email address, "exampleemail@email.com", using the method input_email. Following that, it inputs a password, "*****************", using the method input_password. Finally, it clicks a login button again through the method click_logins_buttons.

Essentially, the code automates clicking the login buttons, entering an email, entering a password, and triggering the login action for a user dashboard. Because the email and password are written directly into the test steps, testing a different set of credentials means writing another test.

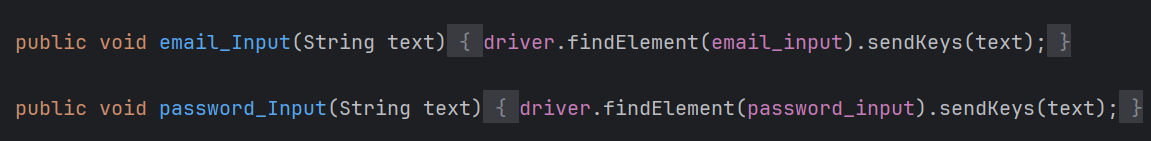

The above code uses Selenium WebDriver to define two methods (email_Input and password_Input) for automating text input into the email and password fields on a web page. Each method takes a parameter (text) representing the data to be entered and uses Selenium's findElement with a specific locator to find the corresponding input field. The sendKeys method is then called to simulate manual data input.
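The one-test-per-data-set pattern described in this section can be sketched without a browser. In the sketch below, `FakeLoginPage` is a hypothetical in-memory stand-in for a Selenium page object (it stores the typed values instead of calling `findElement` and `sendKeys`), its login rule is invented for the example, and the password is a placeholder, not a real credential.

```java
// Sketch of manual data-input style tests: every data set gets its own test method.
final class ManualInputSketch {

    // Hypothetical stand-in for a Selenium page object; no browser is involved.
    static final class FakeLoginPage {
        private String email = "";
        private String password = "";

        void inputEmail(String text) { email = text; }       // would wrap sendKeys on the email field
        void inputPassword(String text) { password = text; } // would wrap sendKeys on the password field

        // Invented rule for the example: login succeeds with an "@" in the email and a non-empty password.
        boolean clickLogin() {
            return email.contains("@") && !password.isEmpty();
        }
    }

    // Dedicated test for one hard-coded data set: valid credentials.
    static boolean testLoginWithValidCredentials() {
        FakeLoginPage page = new FakeLoginPage();
        page.inputEmail("exampleemail@email.com");
        page.inputPassword("placeholder-password");
        return page.clickLogin();
    }

    // A second data set needs a second, nearly identical test.
    static boolean testLoginWithEmptyPassword() {
        FakeLoginPage page = new FakeLoginPage();
        page.inputEmail("exampleemail@email.com");
        page.inputPassword("");
        return !page.clickLogin(); // passes when the login is rejected
    }

    public static void main(String[] args) {
        System.out.println("valid credentials test passed: " + testLoginWithValidCredentials());
        System.out.println("empty password test passed: " + testLoginWithEmptyPassword());
    }
}
```

The two test methods differ only in the values they type, which is the duplication that a data-driven test removes by moving those values into a data source.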

Distinguishing Factors Between Data-Driven and Manual Data-Input Testing

- Automation:
  - Data-driven testing is automated, using a single script to run multiple test scenarios with different input data.
  - Manual data-input testing relies on manual execution, where testers enter the data for each test case by hand.

- Test Coverage:
  - Data-driven testing provides broader coverage because one script can be reused with diverse data sets, covering many scenarios.
  - Manual data-input testing is limited because every test case needs its data entered by hand, which can result in less comprehensive coverage.

- Efficiency:
  - Data-driven testing is more efficient thanks to automation, scalability, and the ability to identify errors across various conditions.
  - Manual data-input testing can be time-consuming and is prone to human error, which reduces overall efficiency.

- Reusability:
  - Data-driven testing encourages the reuse of test scripts, making maintenance and updates easier as the application evolves.
  - Manual data-input testing requires a unique test case for each input data set, resulting in less reusability.

- Ease of Data Entry:
  - Data-driven testing simplifies data entry: a data generator can produce large amounts of test data, reducing manual effort.
  - Manual data-input testing requires test data to be written by hand, which can be tedious and time-consuming, particularly for extensive datasets.

Conclusion

Both Data-Driven Testing and Manual Data-Input Testing have their places in software testing, and the right choice depends on the testing goals, project needs, and the desired level of automation.

Data-driven testing excels in efficiency, scalability, and covering a wide range of scenarios, making it great for modern testing. Conversely, manual input testing offers a more hands-on, exploratory approach suitable for situations where manual validation is essential. The decision between these approaches comes down to the unique requirements and objectives of the testing process.

Thank you for reading this article. Catch you in the next one! 👋🏾