Software is inherently socio-technical and is not about being a 'hero developer' or another Han Solo-style loner messiah. It is about a collective endeavour from a team. In these terms, one better way of developing software could be, in itself, doing it and helping others do it.

It is 2023, and some adolescent companies are still helpless and alone, doing crud with crud business logic for half a year. Pun highly intended. And yes, they are still calling themselves a professional software development team.

Some others, on the other hand, also helpless and alone, go big on server-expense increasing technologies too early on in the name of 'scaling up' or being 'scalable'. For instance, there are startups where GraphQL and its subscriptions are The Only Way™ or The Best Practice™ regardless of the cost-overhead it brings along. Stacks that provide GraphQL server technologies seem especially prone to this. This is so prevalent that even AWS's own Amplify does not bother to promote a cheaper solution for users needing real-time and reactive UI updates for CRUD & Search.

Cloud services that dumb down things too much risk attracting developers whose ambitions and understanding of the underlying technology itself are also, in turn, towards the left of the bell curve. In this sense, it is usually not 'the best tool for the job' maxim but rather the newest and shiniest that is also in use at or blessed by the FAANG or the Fortune 500s.

As for those in the former group, still writing CRUD API endpoints by hand, tools like ZenStack, GraphQL, tRPC, and Platformatic do not matter or even register as viable ways of saving hundreds of hours of basic grunt work.

Whether we lose money on our servers or on our bloated total person-hours, we are still losing real money, even though real solutions exist that we do not yet know about. Instead, we can use a library or a framework that has given enough thought to these routine, time-consuming issues. Or else, build it. It’s not difficult, apparently.

Reactive, in some contexts, can also carry a bigger meaning, as it may refer to the Reactive Manifesto or be meant in the same vein in tech parlance. Teaching about some of these pledges of the overall software industry also seems to be an effective way of ‘helping others do it’. In doing so, we start sharing a ubiquitous language, à la Eric Evans' Domain Driven Design, but especially on the level of core technical domain topics of day-to-day enterprise problems as opposed to the system-specific business domain. In helping a team develop software, some clues about our own behaviour can be seen in the software that we make.

Conway’s law and communication patterns aside, even our behavioural patterns, habits, and traditions are reflected in the way we design and publish software. For instance, due to my personal and academic inclinations towards anarchy, I try to think of clients as peers with capabilities regardless of whether there is a centralized data source. Thus, despite having a client-server style duplex or half-duplex network, my systems have so far been “peer to peer” in the sense of decentralized controls and permissions-sharing peers.

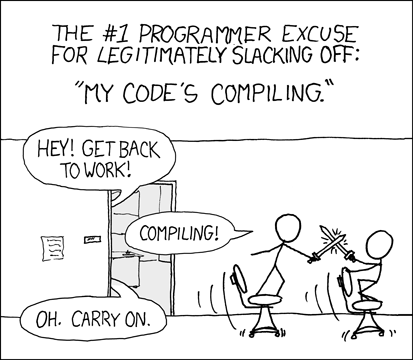

Aside from structural patterns, there could be anti-patterns that arise from the work ethic of people in a team. A popular behavioural anti-pattern is to make excuses to keep waiting inactively while someone else’s work is done. This, along with xkcd’s sword-fight style CI/CD wait, is among the top tongue-in-cheek excuses for being lazy at work I have known over the years. Patterns of incompetence are easy to enact or spot, but communication patterns are still relatively hard to reason about and encode as software.

Some network requests are already too late by the sheer virtue of distances, network speeds and processing speeds of intermediary devices. Nevertheless, as developers, we do try to provide a UI with at least a convincing illusion that everything is happening right away. This does not mean creating a highly complex architecture that creates magic with time illusions. It also does not mean rolling out some ‘simple’ CRUD alone with haphazardly planned web-socket gateways and REST endpoints with sizable network calls. Ironically, software I/O and compute latency are higher with such software. Such a ‘simple’ output is simply wrong.

In this series, we will explore how we can transition away from being a team that is always figuring out how to manually do everything that a better team just automates on day one. This is usually achieved by instinctively abstracting the repetitive parts of our codebase. Getting the usual CRUD, Search, and Auth-related concerns out of the way allows us to better focus on our domain and business logic.

We will look at state-of-the-art server CRUD API solutions like ElectricSQL, ZenStack, GraphQL, tRPC, and Platformatic and at our home-grown tool, PrismQL. We will then examine various ways to navigate your enterprise system architecture journey regarding common persistence, authorization, and retrieval concerns. All of these tools and patterns simplify many day-to-day enterprise software tasks and can immensely reduce our project's time requirements and financial overhead.

Simply CURSED, For Too Long

The aforementioned ‘simple’, for a small company or even just a small-minded large company, is the bare minimum they actually know how to make and hype up. Simplicity or minimalism is not always the outcome of a grand design or of some Herculean code and design cleanup act. Sometimes it is a mask for limited competence and a lack of managerial skills.

Even when everyone in a team is capable enough to do their parts, the software as a whole is a social effort that may not succeed overall. Success is hard to deliver when your project leads don’t understand the need for craftsmanship with comprehensive specifications, roadmaps, tests, abstractions, and libraries. Do they prioritize removing redundant work, or, say, acknowledge why APIs need to evolve over time and not just look like how they used to when we did object-less and type-less PHP as a baby dinosaur? If not, we won't be ready to embrace the last 10 years of software innovations and will remain stuck in the good ol' glory decades before that, out of nostalgia for simpler times.

You could have leadership that keeps justifying making a laughable ‘MVP’ with just CRUD operations because, till then, business logic is sparse, and nobody has to worry about actually defining behavioural specifications. Effectively, if you waste your company’s time just doing admin ‘dashboards’ that could have come out of the box, you do not have to show any competence as a project lead in defining behaviour specs and core innovations. With software, unlike physical products, this could be understandable some of the time: starting with crud and getting better incrementally. However, even when starting simple, the ambitions can be set at least a little higher than merely our eye height. This is simply not the case for many IT shops in Nepal.

Getting by with the bare minimum effort is organizationally incentivized in our region of the world. It is painful to see this happen even to physical products and not just software. In one relatively recent case during my consulting phase, the ‘simple’ product that got rushed into the market had no competitive advantage over even locally produced, cheaper products. But the leadership justified it with “we would be even more delayed if we added all those features”.

Previously, we mentioned a recurring motif: a habit of blocked-waiting, e.g., claiming that some APIs are not ‘fully ready’ and thus not working on the UI even with mocks. This habit, combined with the excuse of rushing a ‘simple’ (aka incompetent) product to the market, might kill some companies here, especially when the product has external dependencies, roadmaps, acceptance requirements and time frames.

In a well-thought-out architecture, with common infrastructure services already available for general use, business logic is just another layer that can be added at the right places in the overall cause-effect event loop. To achieve this, one does not necessarily need Aspect Oriented Programming style point-cuts and join-points, as more widely available patterns and features like interceptors, middleware, decorators and effect systems can handle this 'adding at the right places'.
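As a rough illustration of layering business logic onto a generic CRUD core with middleware, here is a minimal TypeScript sketch. Every name in it (`CrudRequest`, `compose`, `requireOrganization`, `audit`) is hypothetical and not taken from PrismQL, and the handlers are synchronous only to keep the example short.

```typescript
// Hypothetical request shape for a generic CRUD call (illustrative only).
interface CrudRequest {
  model: string;
  action: "create" | "read" | "update" | "delete";
  data: Record<string, unknown>;
}

type Handler = (req: CrudRequest) => unknown;
type Middleware = (req: CrudRequest, next: Handler) => unknown;

// Compose middleware so that the first one in the list runs outermost.
function compose(middlewares: Middleware[], core: Handler): Handler {
  return middlewares.reduceRight<Handler>(
    (next, mw) => (req) => mw(req, next),
    core,
  );
}

// Generic CRUD layer: it knows nothing about business rules.
const stored: CrudRequest[] = [];
const genericCrud: Handler = (req) => {
  stored.push(req);
  return { ok: true, model: req.model, action: req.action };
};

// A business rule added as a layer: a project must belong to an organization.
const requireOrganization: Middleware = (req, next) => {
  if (req.model === "project" && req.action === "create" && !req.data["organizationId"]) {
    throw new Error("A project must belong to an organization");
  }
  return next(req);
};

// A cross-cutting concern added in exactly the same way.
const auditLog: string[] = [];
const audit: Middleware = (req, next) => {
  auditLog.push(`${req.action}:${req.model}`);
  return next(req);
};

const handle = compose([audit, requireOrganization], genericCrud);

// Accepted: reaches the generic CRUD layer.
const accepted = handle({ model: "project", action: "create", data: { organizationId: "org-1" } });

// Rejected by the business rule before it reaches the CRUD layer.
let rejected = false;
try {
  handle({ model: "project", action: "create", data: {} });
} catch {
  rejected = true;
}
```

The CRUD core stays untouched when a rule is added or removed, which is the separation the paragraph above is after.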

Furthermore, one does not necessarily need something with an event loop like Node.js or Vert.x since the same or at least similar can be achieved with most frameworks with an event dispatcher + event handler pattern. For PrismQL, we had to support multiple languages and frameworks, starting with at least:

- C# with Rx,

- Unity with C# and optionally Rx,

- TypeScript with RxJS and/or Events and Promises,

- Go with Echo or Beego

Thus, for us, being able to use one general-purpose API with no-nonsense CRUD and idiomatic SDKs for different languages and frameworks was an essential metric when designing PrismQL intermittently over the last two months. This also helped organize business logic better than in-lining it with CRUD logic. Such granularity and separation of concerns in our modules simplified our tests and increased our test-feedback-based iteration velocity.

These insights, among others, helped us ‘uncover better ways of doing it’, and we would like to ‘help others do it’ by actually lifting the curse of having to write just CURSED operations for months or years. That aspect of work (pun not intended this time) would already have been automated if the teams were using mature tooling.

In one case, tools like Platformatic, PostgREST, PostGraphile, GraphQL, tRPC, Spring Data Repository, JHipster or even Django were simply not on the table as options for a team of young developers. Despite seeing how these tools worked, the technical leadership turned a blind eye to them and continued working with decades-old patterns of manually writing crud code for every entity and relation.

This meant DTOs, DAOs/Repositories, services, controllers, unit tests, integration and e2e tests were all redundantly written for every new entity and relation as their domain grew in size. In month one, a typical project could have around 30-50 database tables or collections. But, in a year, it can have more than 10x that number as features, scopes and analytics are added.

A common phrase thrown around by the leadership to explain avoiding new technology choices was, “over-engineering is too much of a risk” when referring to these or similar relatively newer tools. But ironically, it was never over-engineering when the grind of a crud CRUD continued for months or years instead of just days or weeks.

Beyond ‘simple’ is anything we don’t get right away, thus ‘overengineering’.

CURSED-less, like Server-less

For a usual software codebase with calls to essential open-source software, effectively, there are many more people outside the company contributing towards that company’s software development and future updates than those inside it. These OSS devs have solved interesting common engineering challenges if we are using their tools. Thus, they actually might understand what over-engineering really means. That is why we took advice from wise devs in some of the best engineering shops worldwide that regularly contribute to OSS infra services that we commonly use. We learned that, in the Prisma universe – which is beyond just an ORM ecosystem – there are opportunities for reducing a lot of ‘over-engineering’.

Prisma provides the single source of truth about all of our domain models. Each entity’s attributes and relations can be introspected by a client or a backend dev. This can be used for making more informed decisions about the data model as a whole, as opposed to each field in response separately.

Moreover, Prisma has what it calls ‘DMMF’ or the Data Model Meta Format (AST-as-JSON) and helpful tools like the @prisma/generator-helper. We have been using these a lot to generate boilerplate code on the UI side, especially components that need to work with CRUD logic.

For instance, you need to create a ‘project’, but in your domain model, a ‘project’ needs to be a part of an organization. You can decide to create a form with a searchable dropdown for ‘organizations’ and/or a way to create a new organization inline or with a modal dialog, as applicable. Imagine our software has anywhere from 50 to 500 tables, which is usual for enterprise software. Would we like to go through this exercise of creating a CRUD form every time? Yes, we would, if we do not get that software is about automation and should at least try to be DRY.
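To make the form example concrete, here is a minimal sketch of deriving form widgets from model metadata. The `FieldMeta` and `ModelMeta` types are a trimmed-down stand-in that only loosely mirrors a subset of Prisma's DMMF (check the field names against your installed Prisma version), and the `Widget` descriptors and `formFor` function are hypothetical, not PrismQL's actual generator.

```typescript
// Trimmed-down stand-in for DMMF model metadata (an assumption, not Prisma's full type).
interface FieldMeta {
  name: string;
  kind: "scalar" | "object";
  type: string; // scalar type name, or the related model's name
  isRequired: boolean;
  isList: boolean;
  isId: boolean;
  relationFromFields?: string[]; // foreign-key columns backing a relation
}
interface ModelMeta {
  name: string;
  fields: FieldMeta[];
}

// Hypothetical widget descriptors that a UI generator could consume.
type ScalarWidget = "text" | "number" | "checkbox" | "datetime";
type Widget =
  | { field: string; widget: ScalarWidget; required: boolean }
  | { field: string; widget: "searchable-dropdown"; required: boolean; optionsFrom: string; allowInlineCreate: boolean };

const scalarWidgets: Record<string, ScalarWidget> = {
  String: "text",
  Int: "number",
  Float: "number",
  Boolean: "checkbox",
  DateTime: "datetime",
};

function formFor(model: ModelMeta): Widget[] {
  // Foreign-key scalars are filled in through their relation's dropdown, so skip them.
  const foreignKeys = new Set<string>();
  for (const f of model.fields) {
    for (const fk of f.relationFromFields ?? []) foreignKeys.add(fk);
  }
  const widgets: Widget[] = [];
  for (const f of model.fields) {
    // Ids are generated; list relations are edited from the other side.
    if (f.isId || f.isList || foreignKeys.has(f.name)) continue;
    if (f.kind === "object") {
      widgets.push({ field: f.name, widget: "searchable-dropdown", required: f.isRequired, optionsFrom: f.type, allowInlineCreate: true });
    } else {
      widgets.push({ field: f.name, widget: scalarWidgets[f.type] ?? "text", required: f.isRequired });
    }
  }
  return widgets;
}

const projectModel: ModelMeta = {
  name: "Project",
  fields: [
    { name: "id", kind: "scalar", type: "Int", isRequired: true, isList: false, isId: true },
    { name: "title", kind: "scalar", type: "String", isRequired: true, isList: false, isId: false },
    { name: "organizationId", kind: "scalar", type: "Int", isRequired: true, isList: false, isId: false },
    { name: "organization", kind: "object", type: "Organization", isRequired: true, isList: false, isId: false, relationFromFields: ["organizationId"] },
    { name: "tasks", kind: "object", type: "Task", isRequired: true, isList: true, isId: false },
  ],
};

const projectForm = formFor(projectModel);
```

For the sample `Project` model this yields a text input for `title` and a searchable dropdown fed by `Organization`. The same function would apply unchanged to each of the 50 to 500 models.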

Could this be done with TypeORM, another TypeScript-based ‘typed’ ORM? Perhaps it could. But it would not be as elegant and tiny as the code is for Prisma because TypeORM does not expose a simple-to-use abstract syntax tree in one place for all the entities and relations in the domain, among other things.

In our company, which experiments with tech, as it is in our very name, my predecessor had already built a code generator and generic CRUD API for any entity using TypeORM. TypeScript 5’s decorators and emulating dependent or conditional types using a type map and/or literal types were missing features back when our first WET-CRUD-killer in TypeORM came out.

Currently, with Prisma, these two features of TypeScript are the saving grace to keep our abstract code and advanced type-level gymnastics to a bare minimum. Prisma’s own innovation is also in creating an elegant but JSON-based interface. This could be just exposed over REST to provide powerful type-safe CRUD with aggregation, grouping and counting operations, including even Postgres full-text search – for trusted internal clients. So these CURSED REST APIs and admin dashboards for projects of all sizes are, for us, solved problems.
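A minimal sketch of what exposing a JSON query interface over REST might look like follows. The `Envelope` wire format and the `execute` function are assumptions made for illustration (PrismQL's real request shape is not shown here), and `MemoryDelegate` is a synchronous in-memory stand-in for a Prisma-style model delegate, whose real counterpart is asynchronous and database-backed.

```typescript
// Hypothetical wire format for a generic CRUD request body.
interface Envelope {
  model: string;
  action: string;
  args?: { where?: Record<string, unknown>; data?: Record<string, unknown> };
}

type Row = Record<string, unknown>;

// In-memory stand-in for a Prisma-like delegate with a tiny equality-only `where`.
class MemoryDelegate {
  private rows: Row[] = [];

  create(args: { data: Row }): Row {
    this.rows.push(args.data);
    return args.data;
  }

  findMany(args: { where?: Row | undefined } = {}): Row[] {
    const where = args.where ?? {};
    return this.rows.filter((row) =>
      Object.entries(where).every(([key, value]) => row[key] === value),
    );
  }

  count(args: { where?: Row | undefined } = {}): number {
    return this.findMany(args).length;
  }
}

// Only models registered here are reachable from a request.
const delegates = new Map<string, MemoryDelegate>([
  ["project", new MemoryDelegate()],
  ["organization", new MemoryDelegate()],
]);

// Dispatch a parsed JSON body; anything outside the allow-list is refused.
function execute(body: Envelope): unknown {
  const delegate = delegates.get(body.model);
  if (!delegate) throw new Error(`Unknown model: ${body.model}`);
  switch (body.action) {
    case "create":
      if (!body.args?.data) throw new Error("create requires args.data");
      return delegate.create({ data: body.args.data });
    case "findMany":
      return delegate.findMany({ where: body.args?.where });
    case "count":
      return delegate.count({ where: body.args?.where });
    default:
      throw new Error(`Action not allowed: ${body.action}`);
  }
}

execute({ model: "project", action: "create", args: { data: { title: "Website", organizationId: 1 } } });
execute({ model: "project", action: "create", args: { data: { title: "App", organizationId: 2 } } });
const projectsInOrgOne = execute({ model: "project", action: "count", args: { where: { organizationId: 1 } } });
```

The explicit allow-list of models and actions is a design choice worth keeping even for trusted internal clients, since the request body decides what gets executed.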

But wait, there’s more.

Our tooling can now be used to generate and update SvelteKit and Remix components for our desktop app and web app, respectively. It is indeed a time saver. But why stop there? Prisma’s universe of libraries helps us with custom documentation and DBML generation and even helps with the cache update and eviction logic, as our domain model is but a tree of dependencies on the cache level.

Everything, The 42nd Time

How far can we take this idea? I like to say that it can do everything in general but nothing in particular. RESTful API endpoints are good at one thing in particular. Generic APIs are, well, generic. They need to be told which entity and which query, mutation, or subscription the data payload refers to. Such dependent typing, or even just conditional generic types, is not supported by OpenAPI tools like Swagger, RapiDoc or Redoc. So we built our own add-ons for that too.
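The idea of a payload whose type depends on the entity and operation it names can be sketched in TypeScript with a type map and literal types. This is an illustration under assumptions: the `Models` map, the `Actions` shapes, and the `call` function are hypothetical, and the in-memory tables exist only so that the example runs.

```typescript
// Illustrative domain types; a real project would derive these from its schema.
interface Project { id: number; title: string; organizationId: number }
interface Organization { id: number; title: string }

// Type map: entity name -> row type.
interface Models { project: Project; organization: Organization }
type ModelName = keyof Models;

// Argument and result shapes per action, parameterised by the row type.
interface Actions<T> {
  create: { args: { data: Omit<T, "id"> }; result: T };
  findMany: { args: { where?: Partial<T> }; result: T[] };
  count: { args: { where?: Partial<T> }; result: number };
}
type ActionName = keyof Actions<object>;

// The type of `args` (and of the result) now depends on the values of `model` and `action`.
interface TypedRequest<M extends ModelName, A extends ActionName> {
  model: M;
  action: A;
  args: Actions<Models[M]>[A]["args"];
}
type TypedResult<M extends ModelName, A extends ActionName> = Actions<Models[M]>[A]["result"];

// Untyped in-memory tables; the casts below are confined to this one function.
const tables: Record<ModelName, Array<Record<string, unknown>>> = { project: [], organization: [] };
let nextId = 1;

function call<M extends ModelName, A extends ActionName>(req: TypedRequest<M, A>): TypedResult<M, A> {
  const rows: Array<Record<string, unknown>> = tables[req.model];
  const args = req.args as unknown as { data?: Record<string, unknown>; where?: Record<string, unknown> };
  const matches = rows.filter((row) =>
    Object.entries(args.where ?? {}).every(([key, value]) => row[key] === value),
  );
  let result: unknown;
  if (req.action === "create") {
    const row = { ...args.data, id: nextId++ };
    rows.push(row);
    result = row;
  } else if (req.action === "findMany") {
    result = matches;
  } else {
    result = matches.length;
  }
  return result as TypedResult<M, A>;
}

// `acme` is inferred as Organization; `projectCount` as number.
const acme = call({ model: "organization", action: "create", args: { data: { title: "Acme" } } });
call({ model: "project", action: "create", args: { data: { title: "Website", organizationId: acme.id } } });
const projectCount: number = call({ model: "project", action: "count", args: { where: { organizationId: acme.id } } });

// A request such as { model: "organization", action: "create", args: { data: { organizationId: 1 } } }
// would be rejected by the compiler, because `data` must match Omit<Organization, "id">.
```

Callers get checked arguments and a precise return type from one generic entry point, which is the part that typical OpenAPI descriptions cannot express.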

It was also quickly discovered by my trainee and now a colleague, Suman, that our low-code setup can also be used as-is for building a UI to interactively and safely guide new developers in updating the schema itself.

As we already have a REST API generator for any valid schema.prisma file, a new developer can create new API endpoints by merely manipulating UML drawings on a guided, step-by-step UI that runs safe Prisma migrations. Prisma now has client extensions to add functionality to your models, result objects, and queries.

Also, since the incoming requests are not directly sent off to Prisma, we can even add objects not specified by the Prisma schema into the requests and make PrismQL work beyond Prisma on REST – with the same syntax:

- for Svelte/Kit and Remix/React component generators,

- for a search engine query (tested on Solr),

- for a cache read, evict, update and to enqueue/dequeue jobs (tested on Redis),

- for an object store (tested on AWS S3, MinIO),

- for queries on a graph-based semantic knowledge base (tested on TypeDB) and with Apache Spark,

- for a metaobject that helps with tests, audits, debugging and changing log-levels per request
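One way to picture a single request syntax fanning out to several backends is a small router in front of them. The sketch below is purely illustrative: the `target` and `meta` fields, the `Backend` interface, and the stub backends are assumptions, not PrismQL's documented request format, and the stubs only echo what they would do.

```typescript
// Hypothetical envelope: `target` and `meta` are illustrative field names.
type Target = "db" | "cache" | "search" | "objectStore";

interface RoutedRequest {
  target: Target;
  action: string;
  args: Record<string, unknown>;
  meta?: { logLevel?: "debug" | "info"; requestId?: string };
}

interface Backend {
  run(action: string, args: Record<string, unknown>): unknown;
}

// Stub backends that only describe the call they received.
function stub(label: string): Backend {
  return { run: (action, args) => `${label}:${action}:${Object.keys(args).join(",")}` };
}

const backends: Record<Target, Backend> = {
  db: stub("prisma"),
  cache: stub("redis"),
  search: stub("solr"),
  objectStore: stub("s3"),
};

const logLines: string[] = [];

function route(req: RoutedRequest): unknown {
  // The meta object is consumed here and never forwarded to a backend.
  if (req.meta?.logLevel === "debug") {
    logLines.push(`[${req.meta.requestId ?? "-"}] ${req.target}.${req.action}`);
  }
  return backends[req.target].run(req.action, req.args);
}

const searchResult = route({ target: "search", action: "query", args: { text: "acme" } });
route({
  target: "cache",
  action: "evict",
  args: { key: "project:1" },
  meta: { logLevel: "debug", requestId: "r-1" },
});
```

Because the router sits between the request and Prisma, per-request concerns such as log levels can be handled without any backend knowing about them.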

As someone from a knowledge management and semantics background, I do not like JSON much and would gladly switch away when better formats are available. However, semantic annotation of data models is not happening on or with JSON in this system. What we are calling ‘Typed-JSON’ in PrismQL is just a type-checked carrier of data payload for REST-style state transfers, along with declarative data queries and data-transforms. This is not much of a departure from RESTful APIs, except basic REST does not allow state-transforms, just representational state transfers. Subtle difference.

This was a feature, not a bug, that helped REST win against the likes of the incumbent WSDL services with convoluted SOAP operas. This time around, we are sticking to RESTfulness in most regards, like Roy Fielding would want. The few places we go towards not being fully RESTful are usually because we do not just have hypermedia links that can be followed to make the state of the system change. We also prefer safe and sanitized SQL state transform representations written in (typed) JSON. The rest of it is still fully RESTful in the original sense.

I have built such ‘everything’ libraries for persistence, cache, and search in different languages across my relatively short career. It is fun working on top of other powerful OSS tools and getting help from great OSS devs. This time around, it seems like what we have with PrismQL is not just a low-code tool but rather a new ‘language’. This language is useful precisely because it is not a Turing-complete language that does all that a Turing machine can do.

PrismQL helps us and others replace hundreds if not thousands of lines of mundane boilerplate code for working with the DB, object store, cache, and search engine and generating basic UI elements.

So Long, and Thanks for All The Fish!

To recap, we have many services, like the one exposed by Prisma, that execute almost all kinds of SQL queries in day-to-day enterprise usage without much overhead from the rest of the backend. With something like this in place, one can ensure a smooth API surface for all CRUD-like (create, update, read, search, evict, delete) persistence operations on the database, cache layer, search engine, and object-store.

All queries, mutations, and subscriptions in our proposed setting apply to the server-cache layer, full-text search engines and object stores as well. This makes adding new features all about writing new JSON requests, not more backend code, reducing product costs and time to market.

All queries are hashed and kept as key-value pairs in-memory for the TTL number of seconds for fast retrievals. The query language has a uniform grammar and API for DB, object store, cache, and search.
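A minimal sketch of hashing queries into an in-memory key-value cache with a TTL could look like this. It is an illustration under assumptions: `QueryCache`, `canonical` and `fnv1a` are hypothetical names, and the 32-bit FNV-1a hash is a dependency-free stand-in (a real system would more likely use a stronger hash such as SHA-256 to avoid collisions).

```typescript
// Serialize with sorted object keys so logically equal queries share one cache key.
function canonical(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map(canonical).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const parts = Object.keys(obj)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${canonical(obj[key])}`);
    return `{${parts.join(",")}}`;
  }
  return String(JSON.stringify(value));
}

// 32-bit FNV-1a, used only as a small stand-in hash for this sketch.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

class QueryCache {
  private entries = new Map<string, { value: unknown; expiresAt: number }>();
  private ttlSeconds: number;
  private now: () => number;

  // `now` is injectable so that expiry can be exercised without waiting.
  constructor(ttlSeconds: number, now: () => number = Date.now) {
    this.ttlSeconds = ttlSeconds;
    this.now = now;
  }

  getOrLoad<T>(query: unknown, load: () => T): T {
    const key = fnv1a(canonical(query));
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) return hit.value as T;
    const value = load();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlSeconds * 1000 });
    return value;
  }
}

let fakeNow = 0;
let dbHits = 0;
const loadProjects = (): string[] => {
  dbHits += 1;
  return ["Website"];
};

const queryCache = new QueryCache(30, () => fakeNow);
queryCache.getOrLoad({ model: "project", where: { organizationId: 1 } }, loadProjects); // loads
queryCache.getOrLoad({ where: { organizationId: 1 }, model: "project" }, loadProjects); // same key, served from memory
fakeNow = 31000; // one second past the 30 s TTL
queryCache.getOrLoad({ model: "project", where: { organizationId: 1 } }, loadProjects); // loads again
```

Sorting keys before hashing matters because two clients may send the same query with its properties in a different order.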

Lastly, because this is an architectural refactor with a small footprint, you too could replicate and add this to your own codebase over a weekend or two. Soon after, you can have schema and data-driven generators using Prisma's generator for 'everything'. Some initial usages in our team are geared towards:

- headless UI components & routes

- search queries and headless UI components for search

- usual cache management operations and hooks

- abstracting away the object store API for PrismQL API Users

- AI/ML/Knowledge Engineering-related abstractions integrated into CRUD-like operations

- generating, reporting and running QA and security tests

That's all for now. Catch you in the next one and till then, discover more CLUEs!